The destination of an application's source code is a target environment (e.g. dev, UAT, integration, staging, prod) where end users can consume it. (That's a no-brainer.)

The path from source to production can be implemented in different ways with different tools, typically following the concepts of CI and CD, with other terms layered on top such as DevOps, DevSecOps, pipelines, supply chains, orchestration, choreography and so on. These are not unnecessary. As modern applications shift from monoliths to service-oriented architectures (and/or microservices), the tech layers for defining CI and CD are evolving too.

In this post I will describe my views on some of the drawbacks I have found in traditional pipelines and how the concept of a Supply Chain (it's the new thing) can resolve them.

Concepts for path to production:

So let's start from the beginning (and think of things in conceptual terms first).

Below are a few things that typically happen to an application's source code for it to become usable or consumable by its users:

- it is pushed to a source repository (most likely git)

- some stuff happens to it to transform it into a runnable/executable thing compatible with the target environment

- some more stuff happens to deliver it (the transformed thingy) to a target environment

- the target environment is configured with that transformed thingy so that the users can access it.

In technical terms (assuming the application source code is Java and the target environment is K8s), below are the steps usually followed for the Dev path (source to Dev environment):

- it is pushed to a source repository (most likely git)

- CI processes happen, comprising the following:

  - Execute the test cases

  - Compile the source code using Maven and build a jar or war file

  - Containerise the jar or war file into an OCI image

  - Produce a "deliverable", i.e. a deployment definition (Helm chart, YAML etc.), and write/push it into a git repository (for GitOps operations)

- CD processes happen, comprising the following:

  - Get the "deliverable" config or deployment definition from the git repository

  - Perform the deployment on the target environment

Let's add security components to the CI and CD processes for the staging/prod scenario:

Add to CI:

- Scan the source code and its dependencies for vulnerabilities; if any are found, raise an error and break.

- Scan the produced OCI image for vulnerabilities; if any are found, raise an error and break.

- Sign the produced OCI image.

Add to CD:

- Perform signature checks

Naturally, these steps need to be automated (because there is no point in manually executing them for 20 to 30 applications, and it is impractical too).

Pipeline:

Traditionally, to automate this we write code to define something called a "pipeline" (really, a form of IaC) and use pipeline tools such as Jenkins, Azure DevOps, CircleCI etc. to orchestrate the above steps in order. (Pipeline functionality is also offered by GitHub, Bitbucket etc., but these are still pipelines.) The pipeline IaC then gets added to another git repository or to the source code repository.

We can visualise it like the below diagram:

Here's a screen shot of a simple repository:

Pipeline drawbacks:

In my opinion, below are a few drawbacks of pipelines that hinder the speed, scalability and security of the software supply process:

Deployment configs: These are infra definitions and commonly get added to the same repository as the source code. The issues are: how many files (and how many lines) are there, who supplies them, and who creates and manages them? How do you ensure that a YAML file adheres to governance and security practices every time there is a new environment or new source code? With multiple applications and multiple owners, this leads to unnecessary people and process complexity.

Modularity and Reusability: This is a big one. If the tasks in the CI and CD processes are not modular and reusable, then changing just one component becomes a big issue, even if it is a small change (for example, using Trivy instead of Grype for scanning). Arguably, master templates make this a bit more manageable, but they also have a tendency to get out of control at scale.

File handoffs: Handing off files between different pipelines in an automated scenario is an anti-pattern. It also leads to choke points in the Ops processes and hinders speed, scale and security.

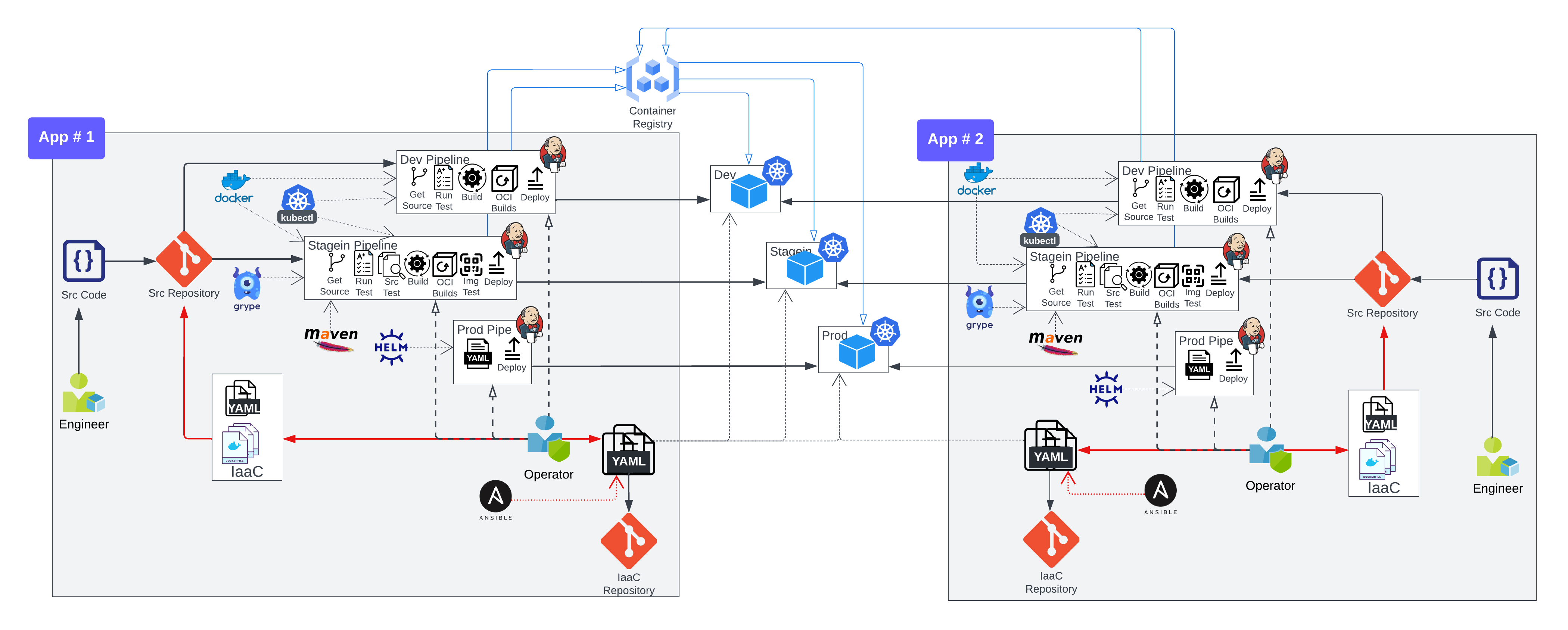

Too many pipelines: As shown in the diagram, there are already 3 pipelines for 1 source code repository for just 3 environments. With this approach (pipelines tightly integrated with the source code repository), each new source repository adds another 3 pipelines. This, in my opinion, is a problem for maintenance and management.

Security: Authentication is needed in a pipeline for various reasons. Common ones are accessing the source code git repository, accessing the image registry, accessing the K8s cluster for deployment etc. Most pipeline tools (e.g. Jenkins) require secrets to be stored in the tool itself. This may be a security issue because we then need to think about who has access to the pipeline server, how to rotate secrets etc., which also hinders scale and raises maintenance concerns.

The diagram below shows the number of pipelines growing by 3 with each additional source repository. Now, do the math for the number of pipelines for 20 repositories.
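To make that arithmetic concrete, here is a tiny sketch. It assumes exactly one pipeline per repository per environment, as in the diagram, and the environment names are only illustrative.

```python
# Assumption: each repository needs one pipeline per target environment.
ENVIRONMENTS = ["dev", "staging", "prod"]  # illustrative names


def pipeline_count(repositories: int, environments: int = len(ENVIRONMENTS)) -> int:
    """Number of pipelines when every repository owns one pipeline per environment."""
    return repositories * environments


for repos in (1, 5, 20):
    print(f"{repos} repositories -> {pipeline_count(repos)} pipelines")
```

With 20 repositories and 3 environments that is already 60 pipelines to write, secure and maintain.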

Functional Specs of a source to target environment path:

Let's list out the functional specifications of a source to target environment path and then map the tech or tools accordingly to achieve those functionalities.

Loosely coupled source repository and target environment: This way the 1:1 integration between repository and pipeline goes away, which promotes reusing source to target environment paths across multiple source repositories. For example, we will only ever have 3 paths:

- Simple Path: Git Poll → Run Tests → Build Image → Generate Deployment Config → GitOps Push

- Secured Path: Git Poll → Run Tests → Scan Source → Build Image → Scan Image → Generate K8s deployment Config → GitOps Push

- Delivery Path: GitOpsPoll → Apply deployment config

Modularity and Reusability: The tasks that make up a source to target environment path need to be modular, so that the same task does not have to be redefined or recreated for different source repositories and different environments. For example, a GitPull task should be defined only once and used in all cases for pulling source code from a Git repository.
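As a rough illustration of "define once, reuse everywhere", the sketch below registers each task a single time and builds the Simple and Secured paths purely by referencing task names. The task names and the `run_path` helper are hypothetical, and the tasks are placeholders that only record that they ran.

```python
from typing import Callable, Dict, List

Task = Callable[[dict], dict]
REGISTRY: Dict[str, Task] = {}


def register(name: str) -> None:
    """Define a placeholder task once; every path reuses it by name."""
    def run(ctx: dict) -> dict:
        # A real task would do the work here; this sketch only records the step.
        return {**ctx, "done": ctx.get("done", []) + [name]}
    REGISTRY[name] = run


for step in ["git-poll", "run-tests", "scan-source", "build-image",
             "scan-image", "generate-config", "gitops-push"]:
    register(step)

# Paths are just ordered references to the shared tasks.
SIMPLE_PATH = ["git-poll", "run-tests", "build-image",
               "generate-config", "gitops-push"]
SECURED_PATH = ["git-poll", "run-tests", "scan-source", "build-image",
                "scan-image", "generate-config", "gitops-push"]


def run_path(path: List[str], repo_url: str) -> dict:
    """Run the named tasks in order for one source repository."""
    ctx = {"repo": repo_url}
    for name in path:
        ctx = REGISTRY[name](ctx)
    return ctx


print(run_path(SIMPLE_PATH, "https://example.com/app-a.git")["done"])
print(run_path(SECURED_PATH, "https://example.com/app-b.git")["done"])
```

Swapping a scanner would then mean changing one registered task, not every pipeline that uses it.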

Event Driven: This is needed to achieve true reusability. A sequence makes a series of tasks linear, so the order of execution takes precedence, which hinders the reusability of the collection (the steps in a pipeline). But let's re-imagine what is really needed for our source to target environment path: we need a series of tasks to complete successfully and to raise an error event on failure. An event driven execution process should let us rearrange these tasks in any order for different scenarios (and even run them in parallel when needed), promoting reusability.
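Here is a minimal sketch of that idea, under the assumption that each task declares the events it waits for and the event it emits. The event and task names are made up for illustration. Nothing in the task list fixes an order: the execution order falls out of the events, and tasks that become ready together could run in parallel.

```python
from typing import List, Set, Tuple

# (task name, events it waits for, event it emits); all names are illustrative
TaskSpec = Tuple[str, Set[str], str]

# Deliberately listed out of order: the events decide when each task runs.
TASKS: List[TaskSpec] = [
    ("gitops-push", {"config-generated"}, "pushed"),
    ("build-image", {"tests-passed"}, "image-built"),
    ("scan-source", {"source-available"}, "source-scanned"),
    ("run-tests", {"source-available"}, "tests-passed"),
    ("generate-config", {"image-built", "source-scanned"}, "config-generated"),
]


def choreograph(tasks: List[TaskSpec], initial_events: Set[str]) -> List[List[str]]:
    """Return rounds of task names; tasks in the same round could run in parallel."""
    events = set(initial_events)
    pending = list(tasks)
    rounds: List[List[str]] = []
    while pending:
        ready = [t for t in pending if t[1] <= events]
        if not ready:
            # Stands in for the "raise an error event on failure" requirement.
            raise RuntimeError(f"no task can react to events {sorted(events)}")
        rounds.append(sorted(name for name, _, _ in ready))
        events |= {emitted for _, _, emitted in ready}
        pending = [t for t in pending if t not in ready]
    return rounds


for number, names in enumerate(choreograph(TASKS, {"source-available"}), start=1):
    print(f"round {number}: {names}")
```

Adding or removing a scan step only means adding or removing one entry; no sequence has to be rewritten.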

Cloud native: The tasks should run in a cloud native manner. This reduces the overhead of managing the configs and secrets that these tasks need, and eliminates the need for servers and the associated maintenance. (OK, with Azure DevOps we do not have to maintain a server since it is cloud based, so that's good. But the issue of maintaining secrets, IaC files etc. still exists.) For example, the GitPoll task needs a secret to authenticate against the private Git repository for polling. The same goes for pushing to the image registry. If we can supply these from K8s itself, we decouple secret management from the CI/CD platform and reduce the overhead of managing them (e.g. access, rotation).

Remove or reduce infrastructure code in application source code: Mixing infra code with application source code is an anti-pattern. By eliminating it we also eliminate the issues with handoffs, choke points in the Ops process etc.

Remove Dockerfile overhead: There are tools available (e.g. https://buildpacks.io/) that can generate an OCI image by analysing the source code. buildpacks.io uses secured base images (thus significantly reducing CVE risk). It can eliminate the need for a Dockerfile altogether. Moreover, it can also "rebase" an OCI image it generated when a CVE is reported. This hugely improves an organisation's ability to respond to a reported threat and quickly release with the security issue resolved. This is very useful, especially from an operations perspective.

Now, interestingly, when we try to achieve this functionality with a pipeline approach using traditional/commonly used tools (e.g. Jenkins, Azure DevOps, CircleCI), we notice the gaps or drawbacks.

My opinion here is that pipelines have been great but they fail to scale for SOA or microservices. Many organisations have started to build their own source to target environment platforms using queues and eventing. The open source community has also been active in solving this using cloud native technologies.

So far I have come across the following options that can achieve the above in a cloud native way:

- Cartographer (https://cartographer.sh/)

- Tekton (https://tekton.dev/)

- BYO (build your own)

In this post I will use Cartographer.

Cloud Native Supply Chain using Cartographer:

We saw the diagram for the source to production path using pipelines. Now let's see a diagram of achieving the same using Cartographer:

- Cloud native: It is K8s native, so a "tooling server" does not exist in this context, which reduces the need for server maintenance. It probably also simplifies creating and managing secrets.

- It is event driven and leverages K8s native eventing capability. (I have explained above why it is an important factor).

- It is modular and reusable: By design it is modular and promotes reusability. Each component/task of a CI or CD process is defined using a templating concept, and the actual object that performs the task is dynamically generated on demand from that template and is disposable by default.

- It promotes loose coupling by design: It introduces a concept called SupplyChain (instead of pipeline). A Supply Chain essentially functions like a pipeline (combining the components that perform the tasks for a source to target environment path) with some major differences:

  - it does not have a tight integration with source code. Rather, it picks up source code based on a selection model (the K8s label selector mechanism). Thus, 1 supply chain serves many source repositories that follow the same source to target environment path.

  - it references the task templates and executes the tasks based on event choreography (instead of sequentially orchestrating them). This provides features like parallel task execution (for speed), rearranging tasks as necessary, a template change being reflected in all supply chains etc.

- By using a Workload definition it is super simple to switch between a Dev path and a Prod path, and it does not require handoffs.

- Auto-generated deployment configs: It promotes GitOps and has templates for defining deployment definitions. Using the Config Template it can dynamically generate the deployment config file for GitOps, so there is no need to place deployment definitions in the source code.

- Dockerfile flexibility: Optionally, using the Image Template from the Cartographer CRDs we can generate an OCI image using kpack (a K8s native implementation of buildpacks.io). This eliminates the need to place a Dockerfile in the source code and also ensures that the same OCI image is generated for all the paths a source code will follow during its lifecycle. If you must use a Dockerfile, then a more traditional way of building the image (e.g. docker) or Kaniko can also be used.

- Remove or reduce the need for handoffs: Since Cartographer is loosely coupled from the source code and can dynamically generate deployment configs, we can eliminate the need for supplying infra definitions, approved Dockerfiles etc. So Ops can be completely segregated from Dev, and there is no need for handoffs or for acquiring infra code when promoting code to different environments.
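The label-based selection described in the list above can be sketched in a few lines of Python. This is only an illustration of the matching idea (every selector label must be present on the workload), not Cartographer's actual implementation; the label key `example.com/path`, the supply chain names and the workload names are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical supply chains, each with the labels a workload must carry.
SUPPLY_CHAINS: Dict[str, Dict[str, str]] = {
    "simple-path": {"example.com/path": "dev"},
    "secured-path": {"example.com/path": "prod"},
}


def select_supply_chain(workload_labels: Dict[str, str]) -> Optional[str]:
    """Return the first supply chain whose selector labels all match the workload."""
    for name, selector in SUPPLY_CHAINS.items():
        if all(workload_labels.get(key) == value for key, value in selector.items()):
            return name
    return None


# Many workloads (source repositories) can share the same supply chain.
WORKLOADS = {
    "orders-service": {"example.com/path": "dev", "team": "a"},
    "billing-service": {"example.com/path": "dev", "team": "b"},
    "payments-service": {"example.com/path": "prod"},
}

for workload, labels in WORKLOADS.items():
    print(workload, "->", select_supply_chain(labels))
```

Switching a workload from the Dev path to the Prod path is then a one-label change rather than a new pipeline.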

How does Cartographer work?

- FluxCD for pulling from private git repository

- Maven to run tests of Java application

- Kpack for building OCI image

- Grype for scanning

- Tekton for running disposable tasks (eg: run scan, run git-write, run maven)

- Cartographer Templates (of various types) to define tasks to be performed for CI and CD

- Cartographer SupplyChain to reference relevant templates that will perform the relevant tasks.

- Cartographer Workload to define the object containing the source code information (e.g. type, selector, git repo information) on which a supply chain will operate:

  - labels: tell Cartographer which supply chain to select

  - GitUrl+Branch/Tag: tells Cartographer where to get the source code for processing.

Cartographer Tutorials:

- Yes, it took a bit of effort to understand how these things work. David Espejo and Cora Iberkleid explain it pretty well and clearly in this video: https://www.youtube.com/watch?v=Qr-DO0E9R1Y

- Also see this video, where Waciuma Wanjohi and Rasheed explain the "why" of Cartographer from a very different angle and how to get started with it: https://www.youtube.com/watch?v=TJPGn0-hpPY (It gave me a solid reason why I would even consider Cartographer.)

- Once I understood it, I managed to create an interactive UI for it that covers most use cases. You can use this UI to get started quickly.

Here's a video I made demonstrating it.

The interactive UI tool is called Merlin's TAP wizard: https://github.com/alinahid477/tapwizard

It will walk you through step by step with a wizard-like UI.
