Ever since I watched episode 1 of Young Justice, the security system of the Hall of Justice and Mount Justice has wowed me. After all, it was built by Batman. You see similar AI-driven, voice-guided systems in pretty much every sci-fi series these days.

I always dreamed of having something similar of my own. Well, now I have it (sort of).

We are not quite in the flying-car era yet (disappointing), but IoT-powered locks are fairly normal these days, and their adoption rate is high.

Some background: What is the Hall of Justice authorisation system?

This is the security system Batman built for the Hall of Justice. The movies haven't shown it yet, but it appears in several scenes of the animated series and the comic books. Basically, it is an AI-powered, voice-guided security system that scans bio-signatures (such as retina, body dimensions, temperature and heart rate) through a scanning device, identifies which member of the Justice League it is, logs the entry and then grants access to the restricted, members-only area.

Here's a GIF image of how this system works:

Intriguing, right? I know.

Even more interestingly, the technology to do all of the above is already available, and I wouldn't be surprised if someone already has it.

What I did

- A bio-signature scanner is complex to build: it needs power and hardware, involves several integration points, and would look clunky when jumbled together given my skill set and the resources available to me. So, no go.

- I could build a facial recognition device. But that would require a camera attached to an RPi (Raspberry Pi), which I would somehow need to wire up and power in a suitable position, and from time to time it would fail (poor lighting, or someone passing too quickly for a small, low-cost camera). No go again.

- I carry a mobile phone and/or wear a smartwatch. Both of these devices emit a Bluetooth signal that contains a device signature. Using a scanner, I can detect the device and know whose device it is (mine, my wife's or someone else's). I have a Sonos speaker, and I have Home Assistant, which can act as a conduit for text-to-speech to the Sonos. Yep, this is a GO.

How I did it

- I keep the config in Evernote. It contains each device's unique ID (a GUID) and the corresponding name.

- I wrote a program in embedded C++ using PlatformIO (PIO) and deployed it to an ESP32. This is the heart of the operation.

- First, it connects to Wi-Fi after boot.

- Then it reads the config from Evernote and keeps it in memory.

- Then it starts scanning. The ESP32 acts as a BLE (Bluetooth Low Energy) scanner and continuously looks for Bluetooth devices within an approximately 1-metre radius, pausing 3 seconds between scans.

- When it finds a device whose ID matches the config data, it sends a REST payload to Home Assistant with the device name as a parameter.

- It then sleeps for a few seconds so it doesn't keep scanning the same device. It also keeps the last found ID in memory for the next 15 minutes, which gives the user time to clear out of its scanning area. If it finds a different device with a matching ID within those 15 minutes, it still sends the payload with that device's name.
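The matching and repeat-suppression steps above can be sketched in plain C++, leaving out the BLE, Wi-Fi and HTTP plumbing. This is a minimal sketch, not the actual firmware: the class name `GreetingFilter`, the RSSI cut-off of -60 dBm used to approximate the 1-metre radius, and the millisecond timestamp (such as Arduino's `millis()`) are all assumptions. It remembers only the last greeted ID, as described, so a different known device is greeted straight away while the same device is ignored for 15 minutes.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical sketch of the per-sighting decision: is this device close
// enough, is it in the config, and has it already been greeted recently?
class GreetingFilter {
 public:
  // Assumption: about -60 dBm as a rough "within 1 metre" cut-off.
  // Real values vary by phone/watch and need calibrating.
  static constexpr int kMinRssiDbm = -60;
  // 15-minute repeat window from the post, in milliseconds.
  static constexpr uint32_t kRepeatWindowMs = 15u * 60u * 1000u;

  // knownDevices maps device ID (GUID) -> display name, as in the config.
  explicit GreetingFilter(std::unordered_map<std::string, std::string> knownDevices)
      : known_(std::move(knownDevices)) {}

  // Returns the name to announce, or std::nullopt to stay quiet.
  std::optional<std::string> onSighting(const std::string& id, int rssiDbm,
                                        uint32_t nowMs) {
    if (rssiDbm < kMinRssiDbm) return std::nullopt;  // too far away

    const auto it = known_.find(id);
    if (it == known_.end()) return std::nullopt;  // not in the config

    // Same device as the last greeting and still inside the window:
    // ignore it (repeated sightings do not extend the window).
    if (hasLast_ && id == lastId_ && nowMs - lastGreetMs_ < kRepeatWindowMs) {
      return std::nullopt;
    }

    hasLast_ = true;
    lastId_ = id;
    lastGreetMs_ = nowMs;
    return it->second;
  }

 private:
  std::unordered_map<std::string, std::string> known_;
  bool hasLast_ = false;
  std::string lastId_;
  uint32_t lastGreetMs_ = 0;
};
```

Using unsigned subtraction (`nowMs - lastGreetMs_`) keeps the window check correct even when a 32-bit millisecond counter wraps around, which `millis()` does after about 49.7 days.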

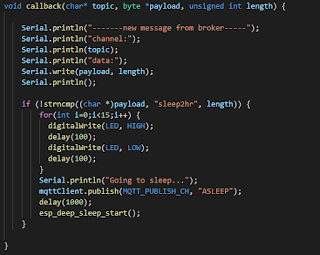
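The config read from Evernote at boot has to become an in-memory ID-to-name map before any matching can happen. The post doesn't show the note's format, so this sketch assumes a hypothetical one: one `<device-guid>,<name>` pair per line, with blank lines and `#` comments ignored. Downloading the note from Evernote is out of scope; `parseDeviceConfig` and `trim` are illustrative names only.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <unordered_map>

// Strip leading/trailing whitespace (including the '\r' of Windows line endings).
std::string trim(const std::string& s) {
  const char* ws = " \t\r\n";
  const auto first = s.find_first_not_of(ws);
  if (first == std::string::npos) return "";
  const auto last = s.find_last_not_of(ws);
  return s.substr(first, last - first + 1);
}

// Hypothetical note format, one device per line:
//   <device-guid>,<name>
// Blank lines, lines starting with '#', and lines without a comma are
// ignored. A later entry for the same ID overwrites an earlier one.
std::unordered_map<std::string, std::string> parseDeviceConfig(const std::string& noteText) {
  std::unordered_map<std::string, std::string> devices;
  std::istringstream in(noteText);
  std::string line;
  while (std::getline(in, line)) {
    line = trim(line);
    if (line.empty() || line[0] == '#') continue;
    const auto comma = line.find(',');
    if (comma == std::string::npos) continue;  // malformed: skip it
    const std::string id = trim(line.substr(0, comma));
    const std::string name = trim(line.substr(comma + 1));
    if (!id.empty() && !name.empty()) devices[id] = name;
  }
  return devices;
}
```

Splitting on the first comma only means a display name may itself contain commas.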

- It is also an MQTT client subscribed to a topic on the Home Assistant MQTT server. When the message "SLEEP" is received on this topic (usually at night, after 10pm), the client calls `esp_deep_sleep_start()`, which puts the ESP32 into deep sleep; the wake-up timer is configured beforehand with `esp_sleep_enable_timer_wakeup(TIME_TO_SLEEP_30_MIN)`. In deep sleep the CPU stops and the contents of normal RAM are lost (only RTC memory is retained), while the RTC timer keeps running and wakes the ESP32 after the sleep duration. The MQTT client also publishes the ESP32's status from time to time, so I can see what state the scanner is in at any given time. The states are: SLEEP, AWAKE, ERROR.
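The SLEEP handling can be kept in small, testable pieces too. This sketch assumes an MQTT library whose message callback hands over a byte buffer plus a length (PubSubClient's callback, for example, has the signature `void callback(char* topic, byte* payload, unsigned int length)`). The payload is turned into a string using that length rather than treated as a null-terminated C string, which is a common pitfall. `parseCommand`, `Command`, `ScannerState` and `kTimeToSleep30MinUs` are hypothetical names; the status strings are the ones listed above. It also shows that `esp_sleep_enable_timer_wakeup()` takes microseconds, so 30 minutes is 1,800,000,000.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Status values the scanner publishes (from the post).
enum class ScannerState { Awake, Sleep, Error };

const char* toStatusString(ScannerState s) {
  switch (s) {
    case ScannerState::Awake: return "AWAKE";
    case ScannerState::Sleep: return "SLEEP";
    case ScannerState::Error: return "ERROR";
  }
  return "ERROR";  // unreachable with a valid enum value
}

// Commands understood on the subscribed topic (only SLEEP in the post).
enum class Command { None, Sleep };

// Build the string from the explicit length: the MQTT payload buffer is
// not guaranteed to be null-terminated, so strcmp() on it is unsafe.
Command parseCommand(const uint8_t* payload, unsigned int length) {
  const std::string msg(reinterpret_cast<const char*>(payload), length);
  return msg == "SLEEP" ? Command::Sleep : Command::None;
}

// esp_sleep_enable_timer_wakeup() expects microseconds (a uint64_t).
constexpr uint64_t kTimeToSleep30MinUs = 30ULL * 60ULL * 1000000ULL;

// On the ESP32, a SLEEP command would then be handled roughly like this
// (device-only code, shown as a comment; `mqtt` and `statusTopic` are
// hypothetical names):
//   mqtt.publish(statusTopic, toStatusString(ScannerState::Sleep));
//   esp_sleep_enable_timer_wakeup(kTimeToSleep30MinUs);
//   esp_deep_sleep_start();  // does not return; the chip reboots on wake-up
```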

- I configured Home Assistant as follows:

- I configured a custom sensor in Home Assistant to display the status of the ESP32 and the name of the last scanned device.

- I wrote a custom switch (using a Boolean variable) that publishes to the MQTT topic. Using this switch, I can manually send the SLEEP command to the ESP32.

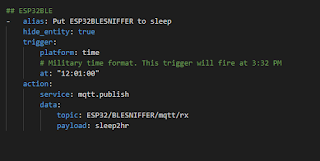

- I wrote an automation that publishes the SLEEP message after 10pm.

- I wrote a TTS (text-to-speech) script that sends speech to the Sonos using HA's TTS with the Google Translate platform. (The Sonos is already available to HA via AirUPnP.)

- I wrote a script that is triggered via the REST API by the ESP32. It takes the device name as a parameter and calls the Sonos say script with the full text "Access Granted. Welcome Batman." (here, Batman is the device name passed to HA as the parameter). It also publishes the device name to the MQTT topic for display on the UI. HA TTS converts the text into audio and plays it on the Sonos speaker over Wi-Fi at 50% volume.

- I plugged the ESP32 into a USB power supply in my garage, near the garage door. Luckily, my main entry door is on the other side of the wall.
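On the ESP32 side, the REST call that triggers the greeting script can be sketched as a request builder. Home Assistant's REST API lets a client call a service with `POST /api/services/<domain>/<service>`, authenticated with a long-lived access token in an `Authorization: Bearer ...` header, and a script can be called directly as the service `script.<script_id>`, with the JSON body becoming the script's variables. The base URL, the script ID `jl_greeting`, the variable name `device_name` and the helper names are hypothetical; the JSON escaping guards against names containing quotes.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Escape a value so it can be embedded in a JSON string literal.
std::string jsonEscape(const std::string& s) {
  std::string out;
  for (unsigned char c : s) {
    switch (c) {
      case '"':  out += "\\\""; break;
      case '\\': out += "\\\\"; break;
      case '\n': out += "\\n"; break;
      case '\r': out += "\\r"; break;
      case '\t': out += "\\t"; break;
      default:
        if (c < 0x20) {  // other control characters -> \u00XX
          char buf[7];
          std::snprintf(buf, sizeof buf, "\\u%04x", static_cast<unsigned>(c));
          out += buf;
        } else {
          out += static_cast<char>(c);
        }
    }
  }
  return out;
}

// Everything needed for one HTTP POST to Home Assistant.
struct HaRequest {
  std::string url;
  std::string authorization;  // value of the Authorization header
  std::string body;           // sent with Content-Type: application/json
};

// Hypothetical helper: call script.<scriptId> with the device name as a variable.
HaRequest buildGreetingRequest(const std::string& baseUrl,
                               const std::string& scriptId,
                               const std::string& token,
                               const std::string& deviceName) {
  return HaRequest{baseUrl + "/api/services/script/" + scriptId,
                   "Bearer " + token,
                   "{\"device_name\":\"" + jsonEscape(deviceName) + "\"}"};
}

// On the ESP32 (Arduino core's HTTPClient), sending it might look like:
//   HTTPClient http;
//   http.begin(req.url.c_str());
//   http.addHeader("Authorization", req.authorization.c_str());
//   http.addHeader("Content-Type", "application/json");
//   int status = http.POST(req.body.c_str());
//   http.end();
```

With these placeholders, the ESP32 would POST `{"device_name":"Batman"}` to `http://homeassistant.local:8123/api/services/script/jl_greeting`, and the script could build its TTS message from the `device_name` variable.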

The Result

- When I enter (or am nearby and about to enter) through the entry door or the garage door, the ESP32 finds the BLE signature emitted by my phone or watch. Most of the time it finds the watch.

- It plays "Access Granted, Welcome Batman!" on the Sonos speaker.

- The scanner sleeps at night and wakes up in the morning, or I can put it to sleep manually (for example, when I am out at work or on holiday). This mimics a security system being turned on, turned off or locked.

Limitation

A current limitation is that the device has to be awake for it to emit a BLE signal. For an iPhone or Apple Watch, if the screen is off, the device is not emitting BLE signals. When a notification is received, the screen turns on, and at that moment the device is emitting BLE signals. With the Apple Watch this happens naturally. For example, when I am driving into my driveway, the hand movement wakes the watch and the ESP32 scanner finds it. Something very similar happens with the iPhone X that my wife uses. So it is not an issue when the user is wearing an Apple Watch and/or using an iPhone X.

Unfortunately, I am currently using an iPhone 8, which doesn't wake up with movement. So when I am carrying only the iPhone 8 in my pocket, the ESP32 does not find the device and nothing happens.

Future enhancement

This is just a start. I plan to turn it into actual lock/unlock functionality. Below is what I have in mind:

- The script in Home Assistant can easily be extended to trigger the garage door button. This will do a real unlock.

- When I am nearby (within 500 cm) and approaching from outside, HA will send a notification using proximity. This will also wake the phone, so the ESP32 will find the device and perform the greeting plus unlock the doors. Not a hard problem to solve, and easily doable.

Demo Video

"Hey, if it didn't happen on camera, it didn't happen at all." So here's the demo of my JL Auth Greeting System.

Thank you!

Some photo highlights:

Loading the embedded code onto the ESP32 (so easy).

PlatformIO: super impressed. Lightweight, runs in VS Code, IntelliSense. What more do you want?

A super important config setting to avoid a flash error. I wasted half a day figuring this out.

Plugged in and working.

The Home Assistant script behind the voice-guided greeting.

The MQTT callback (for a subscribed topic) that never worked :(

The MQTT publish from Home Assistant that worked but never got actioned :(
